Left is Subtract.

Right is Add!

Adaptive design for adding and subtracting using a number line.

PRODUCT IMPROVEMENTS

Prologue

As part of our product improvement process, we regularly analyze large-scale data from active Frax users. These insights reveal how each game performs and how effectively it meets its pedagogical goals—within specific Units and across the broader Sector.

This case study highlights my adaptive game design and data-driven solutions.

EXPERTISE APPLIED

UI Pattern Refinement

Instructional Design

Gameplay Data Insights

Product Iteration

Adaptive Game Design

Design Strategy

Playtesting Synthesis

CRAFTING TOOLS

Teams

Speakflow

Slack

Figma

SVN

Google Suite

LOSS OF ACCURACY

The Core Conflict

While some variability in student accuracy is expected as new concepts are introduced, our data revealed a consistent and atypical trend: a sharp decline in accuracy at a specific point in the game progression, followed by sustained underperformance.

Rather than the usual ebb and flow that allows for small-scale tuning, this pattern pointed to a deeper structural issue within the experience.

This finding prompted a focused investigation to understand the drop's root cause and identify design changes that would better support our learners.

Expected Variability in Question Accuracy

Concerning Variability in Question Accuracy

UI Challenges

INVESTIGATING PAIN POINTS

Our investigation surfaced two key interaction-level issues that contributed to student struggle—both subtle in design, but significant in impact.

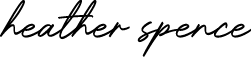

Clearing Correct Answers Too Soon

Initially, the game was designed to clear all parts of a student’s input if any part of the answer was incorrect. The intent was to encourage students to reset and rethink their full response. However, the analysis revealed an unintended side effect: on subsequent attempts, students often changed portions of their answers that were previously correct.

In one notable pattern, over 23% of students who originally submitted a mixed number with a numerator error went on to introduce a whole number error—changing a correct value to an incorrect one.

Ultimately, this input-clearing behavior undermined partial understanding and increased error rates instead of supporting recovery.
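To make the difference concrete, here is a minimal sketch of the original clear-everything behavior next to one possible alternative that keeps fields already matching the target. The `MixedNumber` type and both function names are hypothetical and are not taken from the Frax codebase. The comparison is field-by-field, so it assumes answers are entered in the same form as the target and does not handle equivalent fractions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MixedNumber:
    """A student's mixed-number input; None means the field is empty."""
    whole: Optional[int]
    numerator: Optional[int]
    denominator: Optional[int]


FIELDS = ("whole", "numerator", "denominator")


def clear_all(answer: MixedNumber, target: MixedNumber) -> MixedNumber:
    """Original behavior: any error wipes every field, including correct ones."""
    if answer == target:
        return answer
    return MixedNumber(None, None, None)


def clear_incorrect_only(answer: MixedNumber, target: MixedNumber) -> MixedNumber:
    """Hypothetical alternative: keep each field that already matches the target."""
    kept = {
        name: getattr(answer, name)
        if getattr(answer, name) == getattr(target, name)
        else None
        for name in FIELDS
    }
    return MixedNumber(**kept)


# Target is 2 1/3; the student entered 2 2/3 (a numerator error only).
target = MixedNumber(2, 1, 3)
attempt = MixedNumber(2, 2, 3)

wiped = clear_all(attempt, target)                 # every field is emptied
preserved = clear_incorrect_only(attempt, target)  # whole number and denominator stay
```

In the second case the student only has to revisit the numerator, so the correct whole number cannot be changed by accident on the next try.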

Flat Feedback and Missed Scaffolding

Another UI challenge emerged in our error feedback scaffolding. For the first three incorrect attempts, the only on-screen change was the clearing of the input fields, with no other visible differentiation between try one and try three.

As a result, students often failed to notice when the feedback dialogue updated to display the correct answer after multiple failed attempts.

Even with the correct answer now visible, nearly 50% of students still submitted another incorrect response—a clear indication that visual feedback was too subtle and easily overlooked.

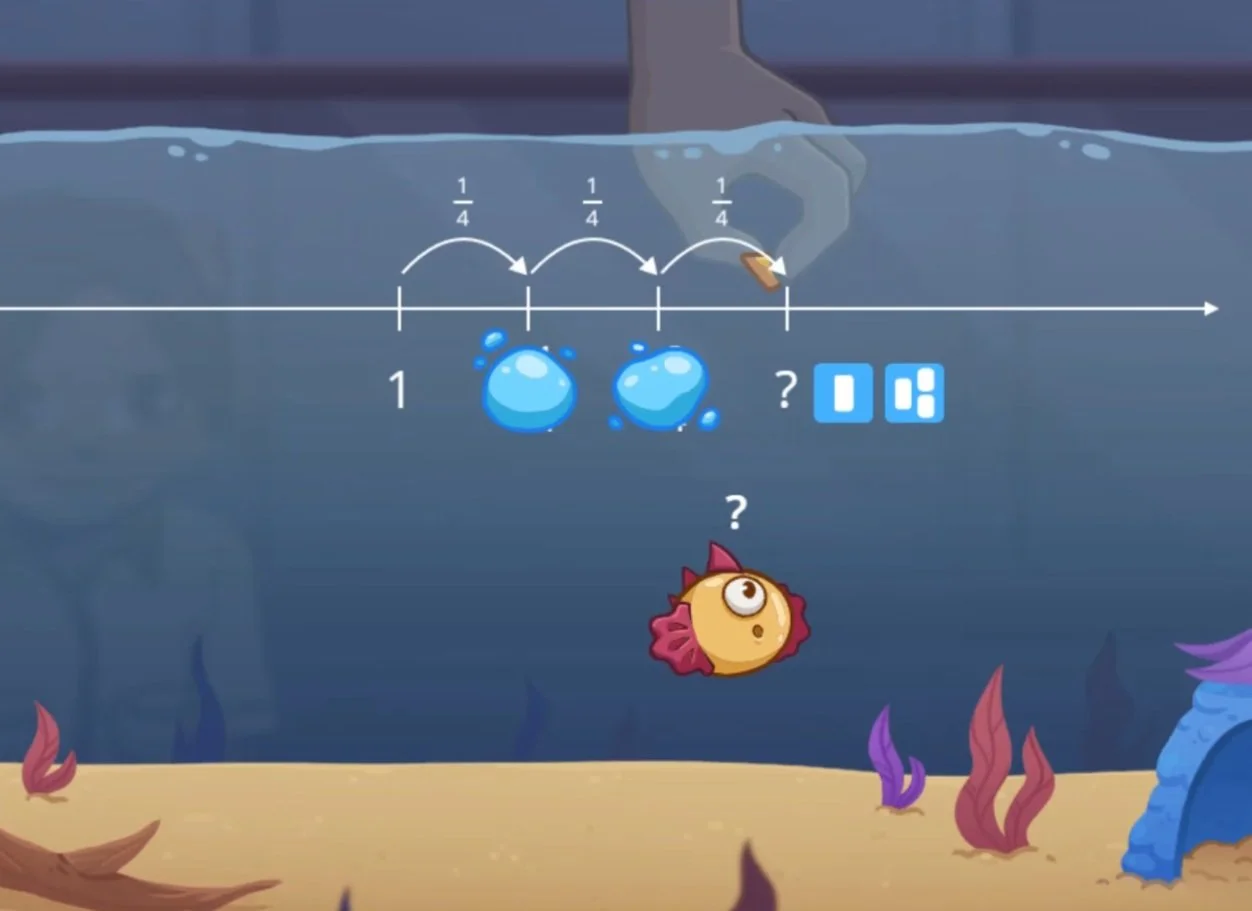

Progression & Pedagogy Mismatch

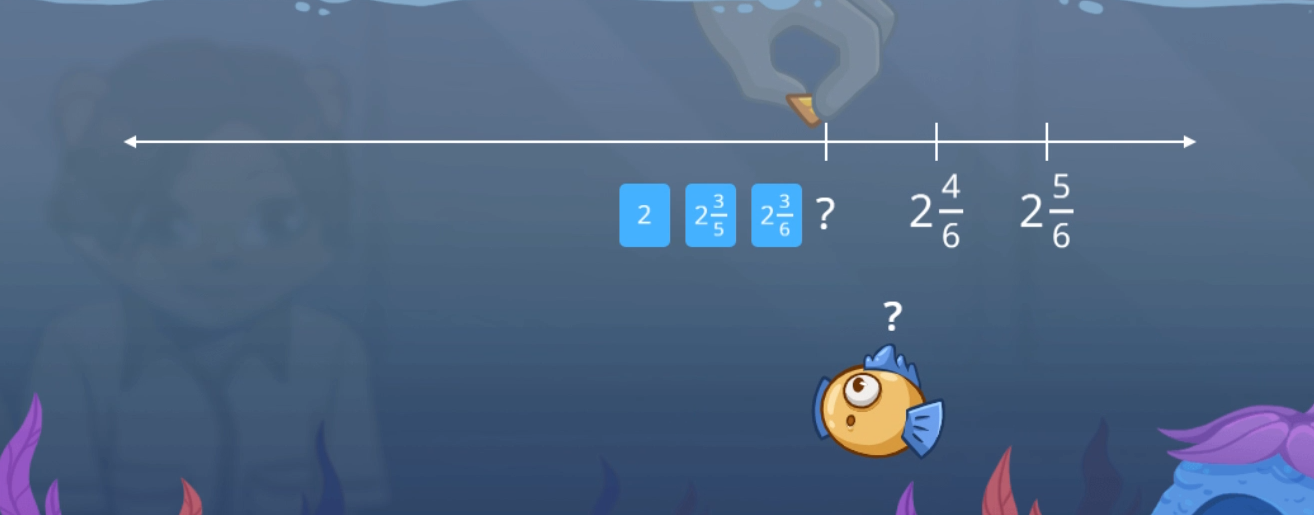

Beyond interface friction, our analysis uncovered deeper misalignments between the game’s instructional progression and students’ conceptual readiness. Two core issues emerged that contributed to the sustained drop in performance.

Whole Number Change Weaknesses

A persistent struggle across the game was students’ difficulty recognizing when and how the whole number value changed as they progressed along the number line. The game transitioned rapidly from benchmark fractions to more complex mixed-number targets. At the same time, students were asked to select the correct answer format (fraction vs. mixed number) and then immediately construct the full mixed number.

This layered cognitive demand came before students had sufficient early-level exposure to whole number transitions. Without structured scaffolding in the early levels, the concept of whole number change remained fragile, creating a foundation-level gap that affected performance throughout each instance of the game.
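As a rough illustration of what "whole number change" means on the number line, the sketch below flags a question when the start and end positions have different whole-number parts. The function name and the floor-based definition are my assumptions for illustration, not the game's actual classification logic.

```python
from fractions import Fraction
from math import floor


def whole_number_changes(start: Fraction, delta: Fraction) -> bool:
    """Return True if moving by `delta` from `start` changes the whole-number part.

    Assumed definition: compare the whole-number part (floor) of the start
    position with that of the end position. A negative delta models subtraction.
    """
    end = start + delta
    return floor(start) != floor(end)


# 1 1/3 + 2/3 = 2: the whole number goes from 1 to 2.
crosses = whole_number_changes(Fraction(4, 3), Fraction(2, 3))

# 1 1/3 + 1/3 = 1 2/3: the whole number stays at 1.
stays = whole_number_changes(Fraction(4, 3), Fraction(1, 3))

# 2 - 1/3 = 1 2/3: subtraction drops the whole number from 2 to 1.
drops = whole_number_changes(Fraction(2), Fraction(-1, 3))
```

Tagging each question this way is what makes it possible to compare accuracy on questions with and without a whole number change.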

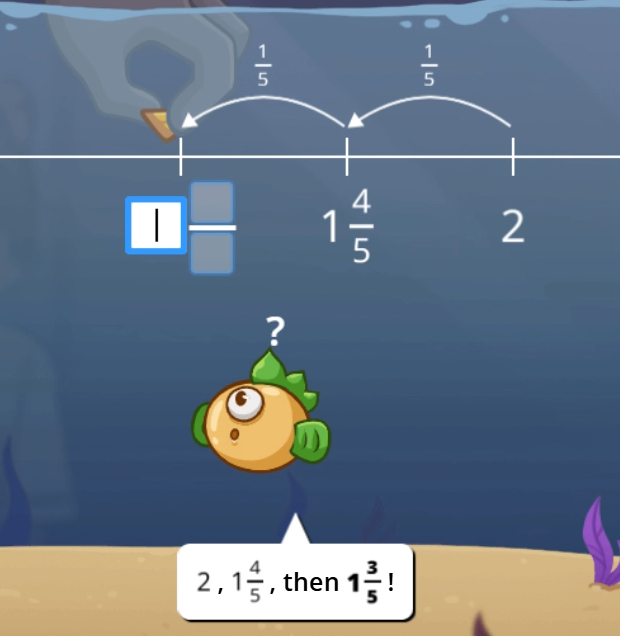

Addition Question Accuracy: no whole number change vs. whole number change

Subtraction Question Accuracy: no whole number change vs. whole number change

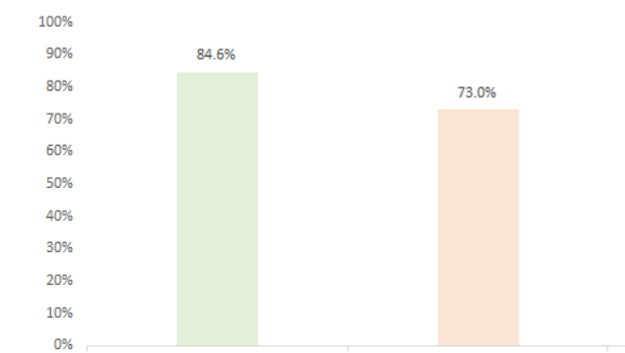

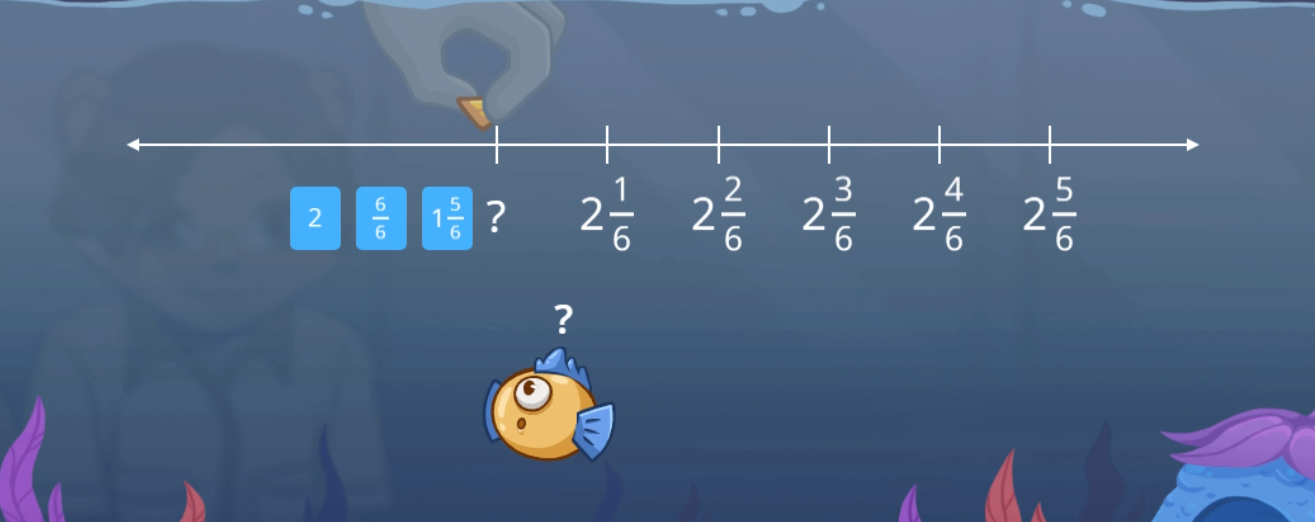

Unconditional Progression to Covered Labels

The second challenge involved our use of covered number line labels as a universal progression step. Regardless of performance, all students were required to engage with number lines that had one or two obscured tick marks.

The pedagogical intent was to help learners develop more intuitive number sense—such as reasoning that “I started at 1 1/3, and added 2/3, so I must now be at 2”—without relying on counting every mark. However, when introduced too early, and combined with weak whole number change understanding and limited feedback signaling, this step became a point of cognitive overload.

This contributed directly to declines in first-try accuracy and reduced student confidence during the latter half of each progression.

Question Accuracy - No Obscured Labels

Question Accuracy - Obscured Labels

These changes turned a rigid progression into an adaptive learning environment—one that meets students where they are and moves with them, not ahead of them.

CRAFTING SOLUTIONS

World Builder

In response to the core challenges, I designed a dynamic progression system capable of adapting in real time to student performance. At the heart of this redesign was a weighted logic model—a system that interprets the type and frequency of student struggle to drive individualized game paths.

Key struggle indicators—such as specific error types, repeated incorrect attempts, and whether support scaffolds (like the correct answer) had already been shown—were each assigned different weights. These inputs shaped both the pacing and complexity of each student’s experience, allowing the game to respond with targeted adjustments rather than a one-size-fits-all sequence.
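A weighted model of this kind can be sketched as a per-question struggle score averaged over recent questions. Everything specific here is hypothetical: the indicator names, the weight values, the `QuestionResult` type, and the choice to average. The sketch shows the general shape of the idea, not the production logic.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical weights; the real values are not part of this case study.
WEIGHTS = {
    "whole_number_error": 3.0,
    "numerator_error": 1.5,
    "repeat_attempt": 1.0,
    "answer_shown": 2.5,
}
DEFAULT_ERROR_WEIGHT = 1.0  # assumed weight for any unlisted error type


@dataclass
class QuestionResult:
    error_types: List[str]  # errors observed while answering this question
    attempts: int           # total tries, including the final one
    answer_shown: bool      # whether the correct answer had to be revealed


def struggle_score(results: List[QuestionResult]) -> float:
    """Average weighted struggle across a window of recent questions."""
    if not results:
        return 0.0
    total = 0.0
    for result in results:
        total += sum(WEIGHTS.get(e, DEFAULT_ERROR_WEIGHT) for e in result.error_types)
        # Only attempts beyond the first count as repeated incorrect attempts.
        total += WEIGHTS["repeat_attempt"] * max(result.attempts - 1, 0)
        if result.answer_shown:
            total += WEIGHTS["answer_shown"]
    return total / len(results)


recent = [
    QuestionResult(["whole_number_error"], attempts=2, answer_shown=False),
    QuestionResult([], attempts=1, answer_shown=False),
]
score = struggle_score(recent)  # (3.0 + 1.0 + 0.0) / 2 = 2.0
```

Weighting error types separately lets a whole number error count for more than a one-off numerator slip, which a plain right-or-wrong tally cannot express.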

One major outcome of this system was a shift in how obscured labels were handled. Previously required for all students, these challenges are now treated as stretch goals, surfaced only when performance data indicates readiness. Similarly, we removed covered-label scenarios that mirrored lines students would later draw themselves, eliminating tasks that didn’t offer meaningful learning value.

In tandem with progression changes, I introduced a simplified interface path to reduce friction and cognitive load. For students demonstrating early struggle, this path replaced numeric entry with button-based inputs and used easier denominator sets (e.g., thirds rather than sixths), creating a smoother re-entry point without breaking learning continuity.
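One way to picture how a struggle signal could select between the simplified path, the standard path, and the obscured-label stretch goal is a small threshold-based selector. The thresholds, the `LevelConfig` fields, and the denominator sets below are illustrative assumptions only.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LevelConfig:
    input_mode: str                # "buttons" or "numeric"
    denominators: Tuple[int, ...]  # denominators the level may draw from
    obscured_labels: int           # number of covered tick-mark labels


# Hypothetical cutoffs on a struggle score where higher means more struggle.
SUPPORT_THRESHOLD = 3.0
STRETCH_THRESHOLD = 1.0


def next_level_config(struggle_score: float) -> LevelConfig:
    """Pick the next level's setup from a student's recent struggle score."""
    if struggle_score >= SUPPORT_THRESHOLD:
        # Simplified path: button inputs, easier denominators, no covered labels.
        return LevelConfig("buttons", (2, 3, 4), 0)
    if struggle_score <= STRETCH_THRESHOLD:
        # Stretch goal: covered labels appear only when data suggests readiness.
        return LevelConfig("numeric", (3, 4, 6, 8), 1)
    # Standard path: numeric entry with every label visible.
    return LevelConfig("numeric", (3, 4, 6, 8), 0)


supported = next_level_config(4.0)
stretched = next_level_config(0.5)
standard = next_level_config(2.0)
```

Under these assumptions, covered labels are never the default. A student reaches them only by performing well, and a struggling student is routed to the lighter interface instead.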

This adaptive redesign ensures that each learner can stay engaged, make progress, and encounter productive challenge—without being derailed by early friction or fragile understanding.

LESSONS LEARNED

The Road Ahead

Future Considerations

A student-facing survey to better understand learners’ perceptions of difficulty and feedback clarity. This would provide richer, qualitative signals to complement gameplay data.

More nuanced audio or visual scaffolds to reinforce key strategy cues without causing task fatigue or adding to cognitive load.

Telling the Story

This project also created opportunities for broader cross-department collaboration. I partnered with our marketing team to film a video on adaptive learning design—an opportunity to share complex systems work with a wider audience and expand my storytelling skills.